On February 19th, the European Commission announced that it had formally opened an investigation into ByteDance's TikTok under the Digital Services Act (DSA) to assess whether the social media platform has taken sufficient measures to protect children.

The TikTok DSA investigation centers on potential breaches of the EU's online content rules, especially those concerning child protection and advertising transparency. It adds to the intense regulatory scrutiny TikTok already faces over data privacy, the platform's potential influence on users, and its possible ties to the Chinese government.

Driving Factors Behind the TikTok DSA Investigation

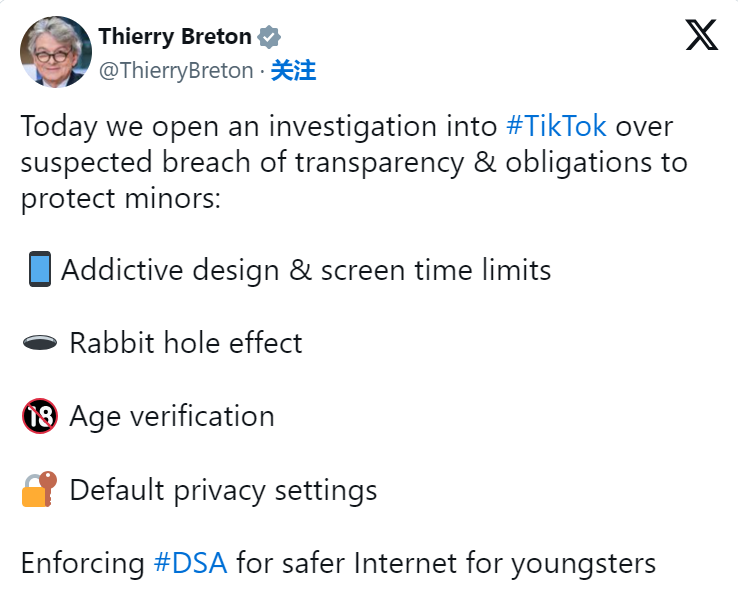

EU Commissioner Thierry Breton emphasized, "Protecting minors is a top priority of the DSA. As a platform that reaches millions of children and teenagers, TikTok must fully comply with the DSA and has a particular role to play in protecting minors online."

The Commission opened the probe after reviewing TikTok's risk assessment report and its replies to the Commission's requests for information. The investigation focuses on the following areas:

- Protection of Minors: The probe will examine the measures TikTok takes against potentially addictive design, against the "rabbit hole" effect that can draw users toward harmful content, and its age-verification procedures for keeping children under 13 off the platform.

- Advertising Transparency: The investigation will check whether TikTok complies with EU rules by clearly identifying all advertising and sponsored content shown to users.

- Broader DSA Compliance: The DSA requires major online platforms to act proactively against illegal content and to reduce risks to society, and the EU is enforcing these obligations strictly.

Potential Implications of the TikTok DSA Investigation

The investigation could have serious consequences for TikTok. Violations of the DSA can lead to fines of up to 6% of a company's global annual turnover, a significant sum for a company of TikTok's size. More severe outcomes could include restrictions on its operations within the EU and, in the most extreme case, an EU-wide ban similar to those imposed in some other countries.

In response, TikTok said it had already introduced features and settings to protect teenagers and to keep children under 13 off the platform. A spokesperson said, "Addressing this issue is an industry-wide effort. We are committed to working with experts and the industry to ensure the safety of teenagers on TikTok and are eager to explain our efforts in detail to the Commission."

Last year, TikTok updated many product features to comply with the DSA and removed 4 million videos deemed illegal or harmful by the EU. In November, the European Commission asked TikTok and YouTube to explain the measures they had taken, as required by the DSA, to protect minors from illegal and harmful content on their platforms.

Digital Services Act (DSA)

The DSA was adopted in October 2022, and its obligations began applying in August 2023 to the first 19 very large online platforms and search engines designated by the Commission.

The DSA protects fundamental rights such as freedom of expression online and gives users safeguards against unjustified account suspensions and arbitrary content removal. By curbing the dominance of large tech companies, it also aims to foster fair competition and create opportunities for small businesses and startups in the online marketplace. Finally, it strengthens accountability by requiring online platforms and search engines to explain their decisions on content removal, recommendations, and advertising.

To make online platforms more accountable, the DSA focuses on:

- Protecting users from illegal content, goods, and services offered through intermediary services such as social media and online marketplaces.

- Granting the European Commission and member states access to the algorithms of major online platforms for supervisory purposes, and requiring the swift removal of illegal content.

- Establishing clearer "notice and action" procedures so that platforms respond quickly when users report illegal content.

- Requiring online marketplaces to ensure the reliability of the information they display through enhanced checks and random inspections.

- Strengthening protection for victims of online violence, especially against the non-consensual sharing of content.

- Penalizing non-compliant online platforms and search engines with fines up to 6% of their global turnover, among other sanctions.

- Introducing new transparency obligations that inform users how content is recommended to them, and restricting the use of sensitive data in online advertising.

- Prohibiting targeted advertising to minors and shielding them from harmful content.

- Imposing stricter obligations on very large online platforms to assess and mitigate systemic risks, and subjecting them to independent audits.

- Allowing the Commission to require very large platforms to take emergency measures during crises, for a period of up to three months.

Christel Schaldemose, the European Parliament's rapporteur on the DSA, said the act would set new global standards, giving citizens more control over how online platforms and large tech companies use their data and ensuring that what is illegal offline is also illegal online. By addressing algorithmic transparency and misinformation, the act also aims to expand user choice and limit targeted advertising.